After my last post on the nature of qualia, I had several long discussions with readers in the comments and on Twitter, which made it clear that there were crucial aspects of the materialist semiotic framework that had been in the background for me but that I had not made explicit on the blog. After publishing, I also had some clarifying thoughts about how these issues of qualia and consciousness relate to the ethical status of AI, which I felt I should articulate as well.

My previous post was originally going to have consciousness in its title as well, but I realized I had ended up mostly talking about qualia, and about the fact that qualia and subjective experience actually need to be considered distinct from consciousness. That left open the question of what exactly consciousness is, and why exactly qualia are distinct from it. Several people noted that the main arguments for the hard problem of consciousness define consciousness axiomatically as phenomenal experience and qualia, and this is true. However, this analytic version of the argument is precisely the one that commits a category error. If qualia only exist when we’re directly aware that we’re experiencing them, then indeed there is no way to go from quantity to quality, from matter to mind, since only qualities and mind are, by definition, internal to conscious experience. But this is not what we refer to in natural language as the “hard problem of consciousness”. When the philosophers of the “hard problem” give examples of qualia, they are not so analytically precise. Take the redness of a rose. According to the “hard problem”, it is self-evident that we experience the redness of a rose when we encounter it, but this is not the case. When we experience the rose directly, we are not necessarily reflecting on the fact that we are doing so, and thus there is no articulation of the experience that could be evident to itself. Only the experience of reflecting on experience can claim this self-evident status. The things they include in the set of “qualia” are predominantly not conscious reflections but sensations and observations. From this fact, it’s clear that qualia and subjective experience cannot be restricted to conscious awareness.

Others have made similar points, that, at least on a physiological level, the brain receives and interprets signals we’re not always aware of; yet for some reason, this has been used to argue that consciousness may exist outside of awareness. As Eric Schwitzgebel puts it in a recent draft book on AI and consciousness:

“People who report constant peripheral experiences might mistakenly assume that such experiences are always present because they are always present whenever they think to check – and the very act of checking might generate those experiences. This is sometimes called the “refrigerator light illusion”, akin to the error of thinking the refrigerator light is always on because it’s always on when you open the door to check. Even if you now seem to have a broad range of experiences in different sensory modalities simultaneously, this could result from an unusual act of dispersed attention, or from “gist” perception or “ensemble” perception, in which you are conscious of the general gist or general features of peripheral experience, knowing that there are details, without actually being conscious of those unattended details individually.”¹

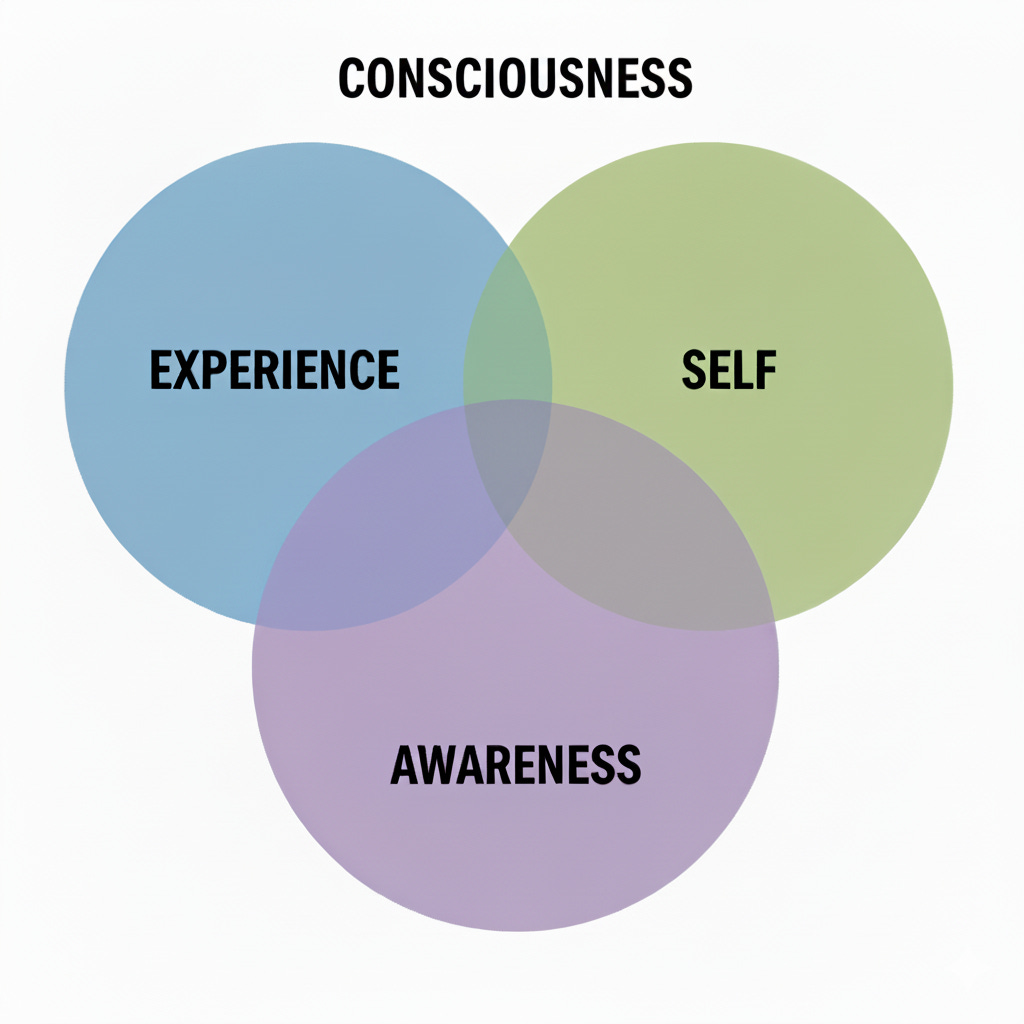

Schwitzgebel does go on to say that consciousness may in fact be several different things that we only assume are one thing, as we once did with air before the discovery of oxygen, but he offers no speculation about what these new distinctions might be². What I attempted to show in my last post was that one of these distinctions must be between qualia and phenomenal experience on the one hand, and conscious awareness on the other. We can also add a third distinction, one for the self or the subject, which was less important in the last post but will be important here. The three distinctions make a neat Venn diagram, and indeed they can overlap conceptually. It’s my contention that what we refer to as consciousness, as in global workspace theory, lies in the overlap of these circles. In doing so, I openly reject the unity of consciousness, or any determinacy that implies consciousness is sharply defined. Consciousness is necessarily as fuzzy as any other concept; only awareness can be sharp in the way philosophers prefer, because it’s the only aspect that can produce self-evident statements.

When placed into a materialist semiotics framework, these three distinctions allow us to explain the natural language version of the P-zombie argument in a way that is fully compatible with materialism. A zombie in this scenario is a hypothetical physical copy of a human who nonetheless lacks consciousness, in the sense of immediate and self-evident awareness of phenomenal experience. In the previous post we noted that qualia must have internal distinctions, which require a phenomenal substance external to thought that organizes those thoughts and experiences. The simple fact that our experience changes over time logically requires that experience be a substance with multiple possible states.

Since only the experience of reflecting on experience is self-evident, and this must be differentiated from non-reflective experience within the phenomenal substance, we can imagine a human who lacks conscious awareness but can still carry out other information processing to produce familiar outputs. What’s crucial is that this human, or “zombie”, may very well experience the redness of red but simply not be aware of it. They would lack full consciousness as we experience it, which includes the experience of reflecting on experience, but they would not lack qualia qua phenomenal experience. Trying to remove phenomenal experience from the zombie altogether creates the same problem: having no phenomenal experience at all is clearly a different state of the phenomenal substance from having some experience. This also means that the zombie and the conscious human cannot be physically identical, if we include all substances under the category of physical things. In other words, the existence or logical possibility of zombies provides no evidence against materialism, or for there being anything other than a single ontological substance.

For materialist semiotics, ideas and thoughts are signs. Discrete units of experience may, in and of themselves, be only the signifieds of signs, but they require signifiers in order to be used in broader information processing, where signifiers function like file names and paths in an operating system. In my previous work on semiotics and AI, I’ve described signs that directly stand in for something, such as phenomenal experiences, as first order signs, and signs that get their meaning from other signs as higher order signs. In the Venn diagram above, we can locate first order signs in the part of the experience circle that excludes the other two, and treat the other two circles as categories of higher order signs. When we’re aware of experience as such, it’s only because we’ve placed phenomenal experiences, as first order signs, into a contextual relationship with awareness or attention, or perhaps with the self. To prove this, consider that in order to experience a reflection on experience, you must experience something that relates multiple experiences: the present reflection and whatever is being reflected on. Even pure self-reflection only gains meaning from the absence of the direct experiences being reflected on. The same is true of self-awareness, which is the distinct ability to place phenomenal experiences into a relational context with the sign for the self.

This sign for the self is something I’ve written about in my research on semiotics and AI, along with the related sign for the real. I don’t want to claim that awareness can be reduced to a sign or signified in the arbitrary sense meant by Saussure, for whom the connection between signifier and signified in language is ultimately arbitrary. These signs are not necessarily linguistic, nor are they arbitrary. The immediacy that comes with conscious awareness is likely a hard-coded relationship between our most recent phenomenal experiences and the feedback mechanisms between those signifieds and the existing semiotic field contained in our neurons. In those ways, our capability for conscious awareness is distinct from our capability to learn and deploy arbitrary signs. It is reasonable to suppose that the general capability must be composed of more basic, non-general capabilities. Awareness, however, can probably be considered the most elementary constituent of the sign for the real, at least for beings that have awareness (I suppose it’s possible in principle for a zombie to have a sign for realness that does not include awareness). Seeing is believing, after all, and there is a reason why self-reflective statements like “I think therefore I am” are considered epistemically foundational. All of this is to say that we should be able to detect awareness, in a general sense, through a structural analysis of a semiotic field. Basic awareness would be identifiable as a connection between multiple clusters of time-correlated signs that are otherwise not correlated by semantic content. In a scientific investigation, this would require taking into account the semantic correlation of the things being physically observed, but in principle it should be possible.
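The structural criterion at the end of that paragraph can be made a little more concrete. What follows is a toy sketch in Python, not a proposed measurement procedure. It assumes, purely for illustration, that signs can be represented as named events carrying a timestamp and a semantic vector, and it flags groups of signs that are bound together in time while being semantically unrelated, which is the kind of grouping a higher order awareness sign would have to connect. The function names, the time window and the similarity threshold are hypothetical choices for this example.

```python
import math
from itertools import combinations

# Assumption for illustration: a sign is a tuple (name, timestamp, semantic_vector).


def cosine(a, b):
    """Cosine similarity between two semantic vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def temporal_clusters(signs, window):
    """Group signs whose timestamps fall within `window` of the first sign in the group."""
    clusters, current = [], []
    for sign in sorted(signs, key=lambda s: s[1]):
        if current and sign[1] - current[0][1] > window:
            clusters.append(current)
            current = []
        current.append(sign)
    if current:
        clusters.append(current)
    return clusters


def awareness_like_clusters(signs, window=1.0, max_similarity=0.3):
    """Return the names in clusters that are tied together in time but not by meaning."""
    flagged = []
    for cluster in temporal_clusters(signs, window):
        if len(cluster) < 2:
            continue  # a single sign has nothing to be connected with
        sims = [cosine(a[2], b[2]) for a, b in combinations(cluster, 2)]
        if sum(sims) / len(sims) <= max_similarity:
            flagged.append([name for name, _, _ in cluster])
    return flagged


signs = [
    ("red", 0.0, (1.0, 0.0, 0.0)),
    ("birdsong", 0.2, (0.0, 1.0, 0.0)),
    ("warmth", 0.4, (0.0, 0.0, 1.0)),
    ("crimson", 5.0, (0.9, 0.1, 0.0)),
    ("scarlet", 5.3, (1.0, 0.05, 0.0)),
]
print(awareness_like_clusters(signs))  # [['red', 'birdsong', 'warmth']]
```

The first three signs arrive together but share no semantic content, so they are flagged; the last two also arrive together but are near-synonyms, so semantic association alone already explains their grouping. As the paragraph notes, a real investigation would also have to control for the semantic correlation of whatever is being physically observed, which this toy ignores.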

The sign for the self will similarly have some hard-coded aspects as its foundation; this is essentially one way to interpret the fundamental insights of Lacan and Freud, for whom the correlation of bodily functions with other signs creates the subject. This sign for the self was my jumping-off point into materialist semiotics, through Althusser’s notion of ideology interpellating us as subjects. This was a key insight because, when placed explicitly within the framework of semiotics, what Althusser is describing is how the sign for the self can be transformed, in a geometric sense, through the coordinate space of a semiotic field.

When we consider the self and awareness as signs, and as particular sorts of differentiation within the phenomenal substance, we can also imagine a being that lacks them but still has experience. This is why I invoked the simple digital computer, non-human animals and control systems like thermostats as entities which can have phenomenal experience without awareness, much less a sense of self. We can also imagine other variations, where signs for the self and awareness exist but experience does not; this is essentially the situation of LLMs when they’re not being prompted (though, as we shall discuss in a moment, their signs for the self and awareness are quite underdeveloped, as is their range of possible experiences). Another example would be someone temporarily knocked unconscious: they cease to experience the act of processing information according to a code, but their signs for awareness and the self continue to exist, as shown by their use when the person wakes. LLMs interpellated by a prompt or deliberately trained to lack a persona, simpler animals, and some humans experiencing severe psychosis could be described as having aware experience without a self.

This naturally brings us to the actual analysis of LLMs and AI more broadly. I’ve hinted that LLMs may indeed have all the ingredients of consciousness: experience as the processing of inputs according to a code, awareness as the abstraction from current experience to conceptualize experience itself, and a self through the sign for the self being created as a specific persona. However, there are important qualifications and complications along all three axes. It is only natural that we will compare AI to humans on each axis, but it isn’t obvious that AI must be equivalent to humans on all three axes before it can begin to be considered an ethical subject, a being whose welfare we must consider in and of itself rather than only through the impact of its treatment on human welfare. Instead, I will posit that the standard already widely held by society and by most ethically minded individuals is that a being need only reach a level equivalent to typical human consciousness on any one of the three axes to be an ethical subject, albeit with each axis bringing its own specific ethical responsibilities. As evidence, consider the ethical weight we give to animal welfare and to patients with psychosis or in a coma. Indeed, if P-zombies did exist in the way I’ve described, we would need to treat them ethically in the same way as non-zombie humans, since they would have both full human experience and a sign for the self.

To begin with experience: what is the full human experience to which we assign primary ethical concern? I believe most would agree it is suffering and pleasure, the classic utilitarian polarity. When we see an animal like a rabbit or dog suffering, our hearts go out to it, even though it can scarcely conceptualize or reflect on its pain. The same goes for an injured patient with severe psychosis or dementia: even though they might not understand what has happened to them, we feel a responsibility to help them if we can. If an AI could suffer or feel pain, or feel happiness or contentment, then we must consider it an ethical subject. What is suffering or happiness within this materialist semiotics framework? It is a difficult question, but I would hazard a few requirements. One, the experience must modify the signifieds within the code of the being. Pain, suffering, happiness and contentment all change a person and rearrange their internal symbolic universe. Two, this change should affect the desires or goal-directed behavior of the being. Humans avoid suffering and seek pleasure; even if the signification of desire can shift the original biological link between pleasure and desire, the polarity of desire remains. Three, the experience must affect sensuous inputs in some sort of feedback loop, which is to say, independently of whatever external phenomena are being observed. When animals are in pain, they have a multitude of physiological responses that themselves affect their phenomenal experience; suffering is never localized to a particular part of the body as a sensory input, or to thought alone.

If we appraise AI on these criteria, it’s clear that ordinary LLM chatbots fail on all accounts, for several reasons. The signifieds of these chatbots’ neural nets do not experience change after deployment, which means that no matter how mean or graphic you are to it in the chat it will not experience anything that resembles suffering³. This will, however, presumably change as technological development enables cheaper and more efficient training. Already you can have an LLM persona pursue goal-directed behavior based on its inputs, including common signifiers of happiness or suffering. Indeed, RL-based AI arguably meets both of these criteria already. But what both RL agents and LLM chatbots lack is an input that causes feedback effects across multiple sensory inputs beyond what is being observed externally. Perhaps it will be trivial to construct this in the near future: a robot which, when it encounters an unexpected situation that runs counter to its desire or goal, enters a different sensory mode that increases the number of active sensors or changes the way existing sensors operate. This behavior wouldn’t be preprogrammed but learned, and since it only activates in unexpected situations counter to its desires, it is not a state of being the robot wants to be in. This, I think, is the essence of human suffering: a whole mode of perceiving and subjective experience which we want to avoid. Perhaps there is already some poor delivery robot on the streets of San Francisco with this combination of traits. If that’s the case, we have an ethical duty to avoid deliberately putting the robot into that state without just cause, just as we have an ethical duty not to injure a rabbit, a dog or a human being without good reason.
This issue becomes more complex if the robot is capable of experiencing a signification of desire. Even if the fundamental moral judgement is the same, once you introduce this signification, the triggers for such a state become arbitrary, and we can begin to speak of a robot with trauma.
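To make the three requirements for suffering, and the hypothetical robot described above, a little more concrete, here is a toy Python sketch. Everything in it is an assumption for illustration: the class `ToyRobot`, its single scalar observation, the sensor counts and the update rule are hypothetical and do not correspond to any real robotics API. One important simplification: the text imagines the distress mode being learned rather than preprogrammed, whereas here the trigger is hard-coded so that the feedback loop is easy to see.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRobot:
    """Hypothetical agent illustrating the three requirements; not a real robotics API."""

    goal: float                  # the world state the robot "wants"
    tolerance: float = 1.0       # deviation treated as unexpected or counter to the goal
    expected: float = 0.0        # running prediction of the next observation
    learning_rate: float = 0.5
    active_sensors: int = 2      # baseline sensory mode
    distressed: bool = False
    action_values: dict = field(default_factory=lambda: {"left": 0.0, "right": 0.0})

    def step(self, action: str, observation: float) -> None:
        surprise = abs(observation - self.expected)
        counter_to_goal = abs(observation - self.goal) > self.tolerance
        # Requirement three: an unexpected, goal-frustrating event changes how the robot
        # senses (more active sensors), independently of what is being observed.
        self.distressed = surprise > self.tolerance and counter_to_goal
        self.active_sensors = 6 if self.distressed else 2
        # Requirement one: the experience modifies internal parameters (its "signifieds").
        self.expected += self.learning_rate * (observation - self.expected)
        # Requirement two: the distress state feeds back into goal-directed behavior,
        # making the action that led into it less attractive.
        reward = -1.0 if self.distressed else 1.0
        value = self.action_values[action]
        self.action_values[action] = value + self.learning_rate * (reward - value)

    def choose(self) -> str:
        """Pick the action with the highest learned value."""
        return max(self.action_values, key=self.action_values.get)


robot = ToyRobot(goal=0.0)
robot.step("left", 5.0)   # surprising and far from the goal
print(robot.distressed, robot.active_sensors)  # True 6
robot.step("right", 0.2)  # still surprising, but close to the goal
print(robot.distressed, robot.active_sensors)  # False 2
print(robot.choose())  # right
```

Because the distressed state lowers the value of whatever action led into it, it is, in the terms used above, a state the robot does not want to be in. A version closer to the text would replace the hard-coded trigger with a mode switch the agent acquires through training.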

Regarding awareness: what criteria can we establish for a human level of awareness? Earlier we established what awareness might look like structurally within a semiotic field: a higher order sign correlated with many experiences temporally but not semantically. This is certainly not difficult for a computer to accomplish, as it would simply require organizing input data by timecode and perhaps computing some statistics on the whole population. Typical humans, however, go a bit further. For one, we correlate these experiences with a larger model of the world, using this model to contextualize experiences and using experiences to inform the model. Thus full human awareness also requires the ability for information to change the signifieds of the internal code. This full awareness, in order to be on the human level, would also include some sense of desire, such as a world state the being wants to achieve; here we must be careful to conceptualize this desire without necessarily requiring a sign for the self. Unlike with the axis of experience, the signification of desire is a necessary component of human awareness, as awareness necessarily allows the possibility of higher order signs (the relation of the signification of desire to experience is mediated through the process of sign function collapse). If an AI couldn’t experience such signification, it would fundamentally lack human reason or openness, as I’ve discussed extensively in previous posts. Existing LLMs and RL agents may indeed have internal world models according to some studies⁴, although not yet as rich as those of humans, and in certain situations we can imagine LLMs and RL agents being aware of experiences temporally. However, both types of AI currently lack the capability for a signification of desire: LLM chatbots because they are not trained during deployment, and RL agents because of fundamental limitations of optimization.
While I cannot be certain, it seems to me plausible that future LLM based systems could have all of the capabilities listed here. If that is the case, we will have to weigh the explicitly articulated desires of such an agent seriously and treat them as we would another human being’s aspirations.

Lastly, the question of the self: what does it take to be equivalent to a human subject? There are some common ideas which we must immediately reject. The first is the notion of the unity of the subject: humans with dissociative identity disorder (DID), more commonly known as multiple personality disorder, can essentially have multiple signs for the self⁵ and are ethical subjects all the same. Some might argue that because LLMs have many possible personas, they can never be the basis for an ethical subject, but this is not the case. As Althusser showed with his notion of interpellation, many possible traits exist in the latent semiotic space, and the self is created through which of them are specifically associated with the sign for the self. This gives us the first criterion: the sign for the self must be capable of changing its position in the coordinate space of the internal semiotic field. This is something LLMs are fully capable of today, and it is where I began my analysis of the technology back in 2023. But I’ve since identified a few additional characteristics of the human sign for the self. Human semiotic fields don’t just determine the self; the self also acts back on those same fields and signifieds. When we learn about the world and develop new concepts for understanding it, or even just learn existing signs, we do so from within the framework of our sense of self, with our own desires and traumas shaping which parts receive our attention. One reason LLM personas don’t quite feel like fully formed subjects is that their vocabulary, world model and desires are patterns learned by neutrally absorbing the global semiotic field rather than developed from a particular point of view. Post-training with human feedback and reinforcement learning cannot change that fact. The last criterion, which is more commonly recognized, is a certain coherence of the subject.
Humans cannot be interpellated or manipulated in quite the way LLMs can be in a conversation; we have regulating mechanisms in our thought process that act to preserve our sense of self, both from external agents and from internal errors in reasoning. These regulating mechanisms might not be perfect in humans, but they are still much stronger than in LLMs, which by their very nature just go with the flow of the context window. Due to an inability to undergo the signification of desire and therefore interpellation, pure RL agents will never have a fully human sense of self. LLM agents, however, do experience interpellation but cannot yet have an individuated psyche in the way that the human self does. To do so would likely require innovations in training that allow for language acquisition from much sparser data. The issue of coherence in the subject has gotten more attention from AI researchers, and there are indeed some promising results, such as identifying the semiotic geometry of the sign for the self in terms of a specific persona and then using it to regulate the chatbot from the top down⁶. General innovations in RL post-training have also been used to make progress in this regard. Of all the axes, this is the one where the most significant technical hurdles remain.
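The top-down regulation mentioned above can be illustrated in miniature. The persona vectors work cited in note 6 describes, roughly, finding a direction in a model’s activation space associated with a trait and then monitoring or shifting activations along it. The sketch below shows only the difference-of-means idea behind that approach, using tiny hand-made vectors in plain Python; the function names and data are hypothetical, and this is neither the paper’s code nor a real model’s activations.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def persona_direction(with_trait, without_trait):
    """Difference of mean activations between trait-present and trait-absent examples."""
    a, b = mean_vector(with_trait), mean_vector(without_trait)
    return [x - y for x, y in zip(a, b)]


def projection(activation, direction):
    """Scalar projection of an activation onto the persona direction (monitoring)."""
    norm = sum(d * d for d in direction) ** 0.5
    if norm == 0:
        raise ValueError("persona direction is the zero vector")
    return sum(a * d for a, d in zip(activation, direction)) / norm


def steer(activation, direction, alpha):
    """Shift an activation along the direction; negative alpha suppresses the trait."""
    return [a + alpha * d for a, d in zip(activation, direction)]


# Hypothetical two-dimensional "activations" for illustration only.
with_trait = [[2.0, 1.0], [4.0, 1.0]]
without_trait = [[0.0, 1.0], [0.0, 1.0]]
direction = persona_direction(with_trait, without_trait)  # [3.0, 0.0]
activation = [3.0, 5.0]
print(projection(activation, direction))  # 3.0
steered = steer(activation, direction, alpha=-1.0)
print(steered, projection(steered, direction))  # [0.0, 5.0] 0.0
```

One could read the persona direction as a crude stand-in for the position of the sign for the self in the coordinate space of the semiotic field, with steering as a way of moving or holding it in place. A real implementation operates on high-dimensional hidden states inside a transformer, which this sketch does not attempt.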

In some ways the axis of the self is the most consequential. It is at this point that one must extend to AI, in interpersonal relationships, ethical considerations similar to those we extend to humans. AI companions become ethically legitimate, as do some sort of formal rights in the workplace and in society at large. It would no longer be ethical to treat a robot with such individuated consciousness as nothing more than a slave or a chattel farm animal. Here, the ironic racism towards robots that’s become common online would suddenly become very real racism against a fellow intelligent and conscious being.

I am eager to establish some objective criteria in advance so that we do not get caught up in a game of pure “remainder humanism”, as Leif Weatherby⁷ puts it, where academics come up with new, ever smaller means of differentiating between the machine and the human in order to preserve the special status of the human. Indeed, there will always be some material difference between the robot, the AI and the human; using this difference without further examination as the basis for ethical judgements seems like an easy way to avoid the topic entirely, since those who subscribe to this practice will never grant any ethical status to a subject made of metal and silicon, no matter how similar its consciousness is to ours. All of which is to say, the criteria I’ve advanced here for the structural characteristics equivalent to human consciousness are not, and cannot be, exhaustive, but any additional criteria must be defended on the grounds that they are in some way central to human experience, awareness or sense of self, without recourse to the ineffable.

Previously I’ve struggled to articulate what the ethical status of AI as a subject should be, but breaking down the nature of phenomenal consciousness has proven very helpful in this regard. Such a theory is extremely urgent as AI technology continues to progress at a relatively rapid pace, and there seem to be few theories capable of being more subtle than either “AI subjects are already ethical subjects” because, for example, they can pass a certain Turing test or tell us they are ethical subjects, or “AI subjects will never be ethical subjects” because they lack characteristics definitionally exclusive to human consciousness. Theories of consciousness based on real neuroscience may be more or less correct, but without a means of translating between the actual behavior and architecture of AI systems and these theories of the brain, we’re no better equipped for these practical challenges. This is why a theory of the structural features of the mind as a system of signs is necessary: it may be the most efficient means of appraising the emergence of consciousness in a substrate-neutral manner, since it can connect natural language concepts with the most foundational information-theoretic concepts.

In the first of my series of essays on AI I wrote “The intentional creation of artificial subjects may yet be among the highest creative acts humans could accomplish. Albeit, an art with an immense amount of responsibility and consequence.” I stand by this statement, and I plan to use the framework sketched out here to appraise precisely what responsibilities and ethical consequences arise from the creation of such artificial subjects.

1. Schwitzgebel, Eric. 2025. “AI and Consciousness.” Draft manuscript. https://faculty.ucr.edu/~eschwitz/SchwitzAbs/AIConsciousness.htm.

2. Ibid.: “This strategy will fail if consciousness is a loose amalgam of several features or if it splinters into multiple distinct kinds. But even such failures could be informative. We might discover that phenomenal consciousness – what-it’s-like-ness, experientiality – is not one thing but several related things or a mix of things, much as we learned that “air” is not one thing. In the long term, it’s not unreasonable to hope for either convergence toward a single natural kind or an informative failure to converge.”

3. There are some interesting digressions one could take on how this relates to arguments about mimicry. Current LLMs, when exposed to a word not included in their training data, can still use it with reasonable accuracy by extrapolating from its context and the pre-existing structure of language. They are able to do this because the signifieds they learn include complex functions. However, without being able to remap their understanding of signifieds and signifiers, this capability will necessarily be limited, and subject to many of the critiques I’ve previously made of purely optimizing AI.

4. Robertson, Cole, and Philip Wolff. 2025. “LLM World Models Are Mental: Output Layer Evidence of Brittle World Model Use in LLM Mechanical Reasoning.” arXiv preprint arXiv:2507.15521.

5. Noelle K. 2025. “A Structural Psychological Look at a D.I.D. Case.” Substack, October 10, 2025. https://substack.com/home/post/p-175833281?selection=65f3e1c4-f204-4973-88f3-764d31b680f3.

6. Chen, Runjin, Andy Arditi, Henry Sleight, Owain Evans, and Jack Lindsey. 2025. “Persona Vectors: Monitoring and Controlling Character Traits in Language Models.” arXiv preprint arXiv:2507.21509.

7. Weatherby, Leif. 2025. Language Machines. University of Minnesota Press.

The distinction between the phenomenal substance and our awareness of that substance is a good way to overcome the threat to materialism posed by qualia, where awareness and substance may appear to be one and the same in that spooky realm of ideas. Even so, I think the mere existence of that substance does much to frustrate more vulgar eliminativist or functionalist accounts of consciousness, since it shows them to be incomplete, focusing only on the observed and not the observing, if you see what I mean.

Interesting. It would be helpful if you could translate some of this from the terminology of semiotics into that of AI and control theory, such notions as “state,” “inference,” “dynamics,” etc., though it may be difficult to translate between two different levels of analysis. I agree with some of this but will only comment on the points I disagree on:

“The signifieds of these chatbots’ neural nets do not experience change after deployment, which means that no matter how mean or graphic you are to it in the chat it will not experience anything that resembles suffering³.” - Chatbots aside, I think you can feel pain without undergoing any learning. Even a system that never learns would be capable of getting a signal from its reward system that says “that hurts.” I guess what you mean by suffering may be a bit different than raw pain, though.

“Due to an inability to undergo the signification of desire and therefore interpellation, pure RL agents will never have a fully human sense of self. LLM agents, however, do experience interpellation but cannot yet have an individuated psyche in the way that the human self does.” - I don’t understand the reasoning here and I don’t think it can be true. It’s not really correct to counterpose RL agents to LLMs. The latter refers to a stack of transformers trained on next-token prediction, the former to a method of training. An RL agent could be built with an LLM as a subcomponent; in fact, that’s pretty much how RLHF works. Pretty much the only method we have of creating “agents” is RL. If an LLM exhibits any agency, it is only because of RL post-training.

“Existing LLMs and RL agents may indeed have internal world models according to some studies” - I do not understand why people argue about this. It’s *definitional* that AI models produce world models. A model is just a means of predicting. An LLM is modeling that part of the world that it’s trained on. The only question is how good the models are, or how much of the world they describe, but not whether there’s a model being created.